./ 2022

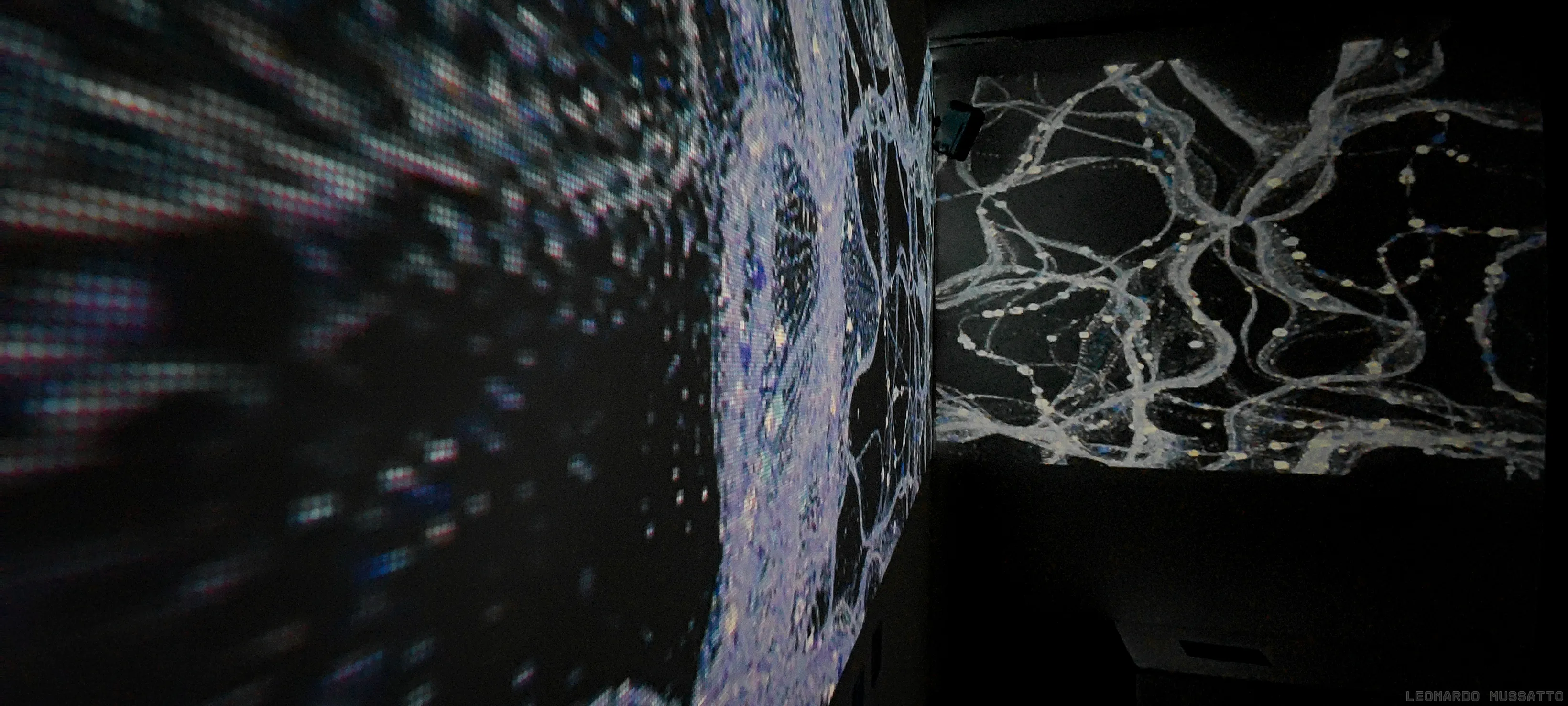

Systems’ Discourse

Fractals, mycelia, and the non-verbal languages of systems

Details

- Team

- Adél Szegedi

Creative Coder

- Katiya Ma

Creative Technologist

- Leonardo Mussatto

Sound Designer & Creative Technologist

./ development

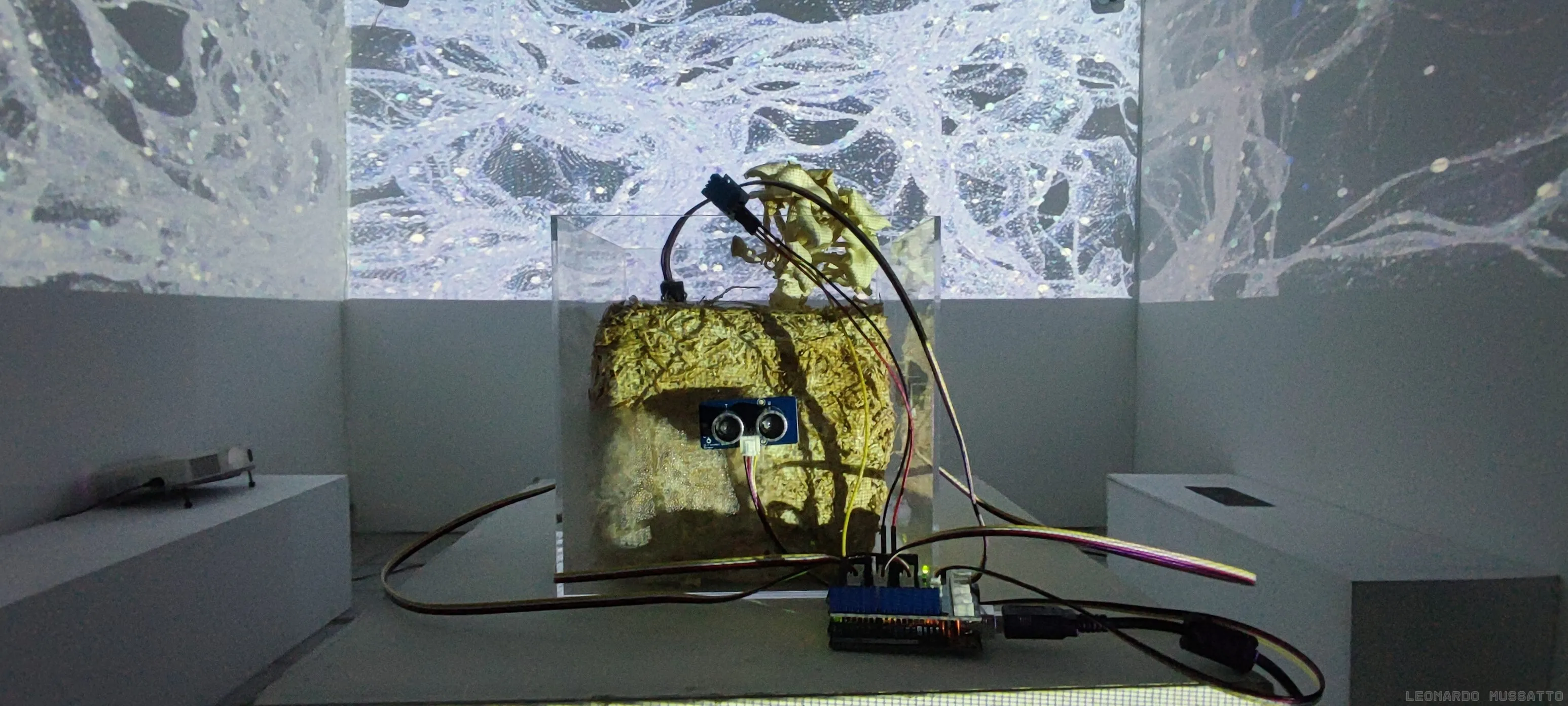

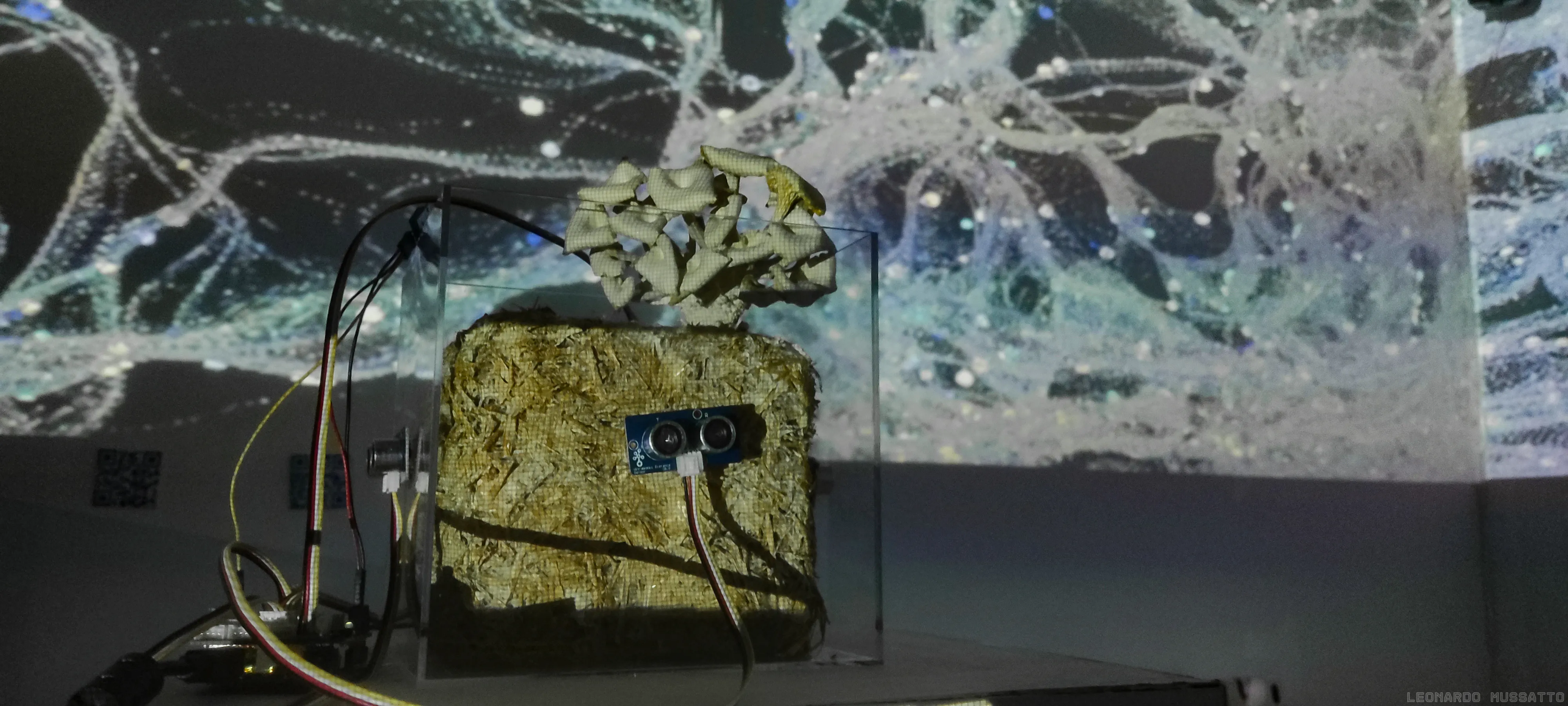

The Living System

Sensor Exploration

Our aim was to integrate a “living system” other than the visitors into the installation, and to focus on how it would communicate with, and be influenced by, the other systems at play. While our early experiments involved plants, which were easily accessible on site, we soon moved to mushrooms, as fungi often play a principal role in coordinating the growth of forests thanks to their ability to communicate.

Reading meaningful data from living organisms, however, is rather complex. Plants and mushrooms do exchange vital information, but they do so through the release of chemicals and delicate changes in electrical charge. Consumer-grade sensors are usually unable to pick up such fine changes. We therefore opted to track environmental factors instead, as they can greatly influence the growth and state of the organism and are often themselves the result of the interplay of complex systems.

We experimented with the sensors available in our university’s “emergent media” lab and chose the subset that best matched the conceptual and practical constraints of the project. The main candidates and decisions were:

Light Sensors (Grove TSL2561 / TMG39931)

rejected: we intended to exhibit in a darkened room to preserve projector contrast, so light readings would have had limited significance

Air quality (Grove Multichannel Gas Sensor)

rejected: tests showed that the selected mushroom samples produced only negligible changes in this sensor’s readings

Air temperature & humidity (DHT11)

adopted: these are factors that would easily change during the span of the exhibition due to the presence of both visitors and projectors

Soil moisture probe (Grove Soil Moisture Sensor)

adopted: this sensor provided meaningful and usable readings

Impedance Sensors

rejected: impedance is influenced by the structure and integrity of biological tissues, so it can reveal, for example, nutrient deficiency, pathogen infection, and temperature stress. Simply connecting the Arduino board to the organism, however, is not enough to obtain meaningful data: this approach requires proper impedance spectroscopy, with specialized equipment and analysis.

Ultrasonic distance sensors

adopted: to track visitors’ interaction with the organism and the installation, we opted for simple ultrasonic distance sensors, as they could be easily integrated into our pipeline and gave us enough data to determine the visitors’ distance from the mushroom.
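
The distance reading behind an ultrasonic sensor follows from the round-trip time of its echo. The sketch below is only an illustration: it assumes an HC-SR04-style sensor that reports the echo duration in microseconds, a speed of sound of roughly 343 m/s, and a hypothetical 80 cm presence threshold. None of these specifics are taken from the installation itself.

```python
# Assumed constant: ~343 m/s at about 20 °C, expressed in cm per microsecond.
SPEED_OF_SOUND_CM_PER_US = 0.0343


def echo_to_distance_cm(echo_us):
    """Convert a round-trip echo time (microseconds) into a one-way distance (cm)."""
    # The pulse travels to the visitor and back, hence the division by two.
    return echo_us * SPEED_OF_SOUND_CM_PER_US / 2.0


def sides_approached(echoes_us, threshold_cm=80.0):
    """Count how many sensors currently see something closer than the threshold.

    `echoes_us` holds one echo duration per sensor; the threshold is hypothetical.
    """
    return sum(1 for e in echoes_us if echo_to_distance_cm(e) < threshold_cm)


if __name__ == "__main__":
    readings = [2000, 10000, 3000]  # made-up echo durations, one per sensor
    print([round(echo_to_distance_cm(e), 1) for e in readings])
    print(sides_approached(readings))
```

A count of this kind is one simple way to tell whether the mushroom is being approached from several sides at once.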

./ development

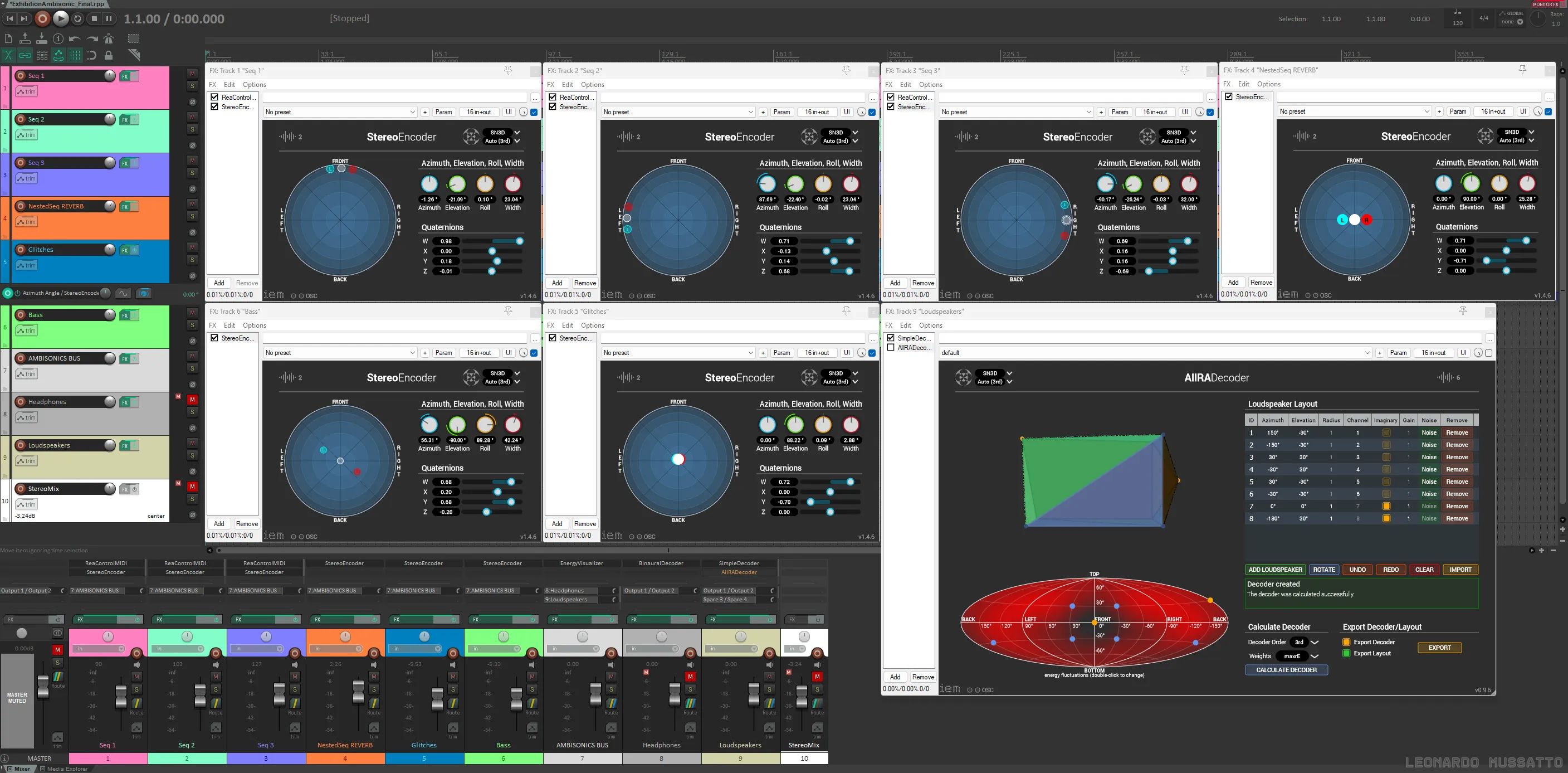

The Sound System

Patch Exploration

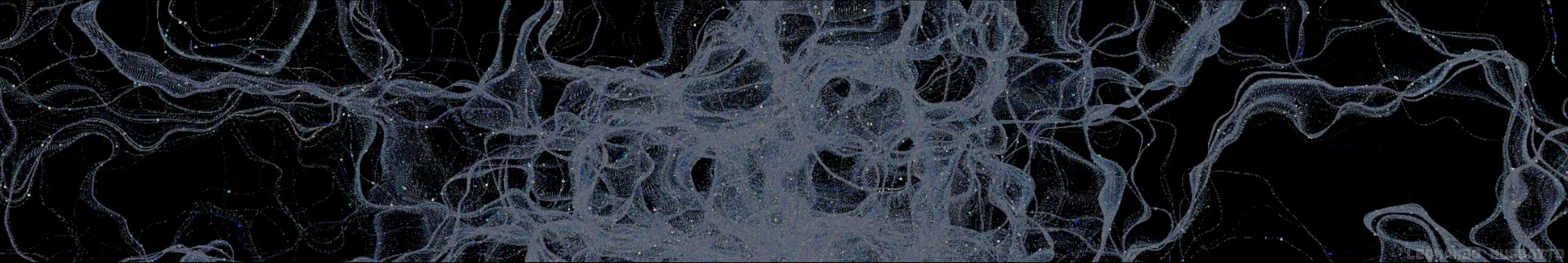

If the living system focused on picking up the often unnoticed effects that systems have on each other when they share a space, the sonic system instead revolved around self-similar patterns, a characteristic of most living systems that rely on communication. Therefore, aside from being generative and fractal-like, this system had to be able to converse with the other systems.

We decided to experiment with a virtual modular synthesizer built around modules inspired by fractals, chaotic functions, and physical modelling. To produce organic modulation and timbral richness, the resulting patch has three principal voices:

Slowly evolving low-end pad

- Source: Hora’s Detour (vector phase-shaping synth) sent in parallel to Prism’s Rainbow (spectral multiband resonator), Vult’s Tangents (Steiner-Parker style filter), and Bogaudio’s LFO+VELO (LFO-controlled VCA)

- Modulation: Axioma Tesseract acts as the central source of modulation, controlling Detour’s inflection points, Rainbow’s filter resonance and level, and the LFO frequency; Tesseract’s rotation parameters are in turn modulated by Hetrick Chaotic Attractors, air temperature, and relative humidity, while soil moisture controls Tangents’ drive; Chaotic Attractors also control Rainbow’s note rotation

- Result: rich sustaining texture modulated by non-periodic motion tied to temperature and humidity, so that room occupancy defines modulation rate, while soil moisture modulates low-end presence
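
Driving synth parameters from sensors comes down to rescaling each reading into a control range. The following is a minimal sketch under stated assumptions: the input ranges (15 to 35 °C, 20 to 90 % relative humidity, a 10-bit soil reading from 0 to 1023), the 0 to 10 V output range, and the destination names are all hypothetical, and the way the readings actually reached the patch is not described here.

```python
def scale(value, in_lo, in_hi, out_lo=0.0, out_hi=10.0):
    """Linearly map `value` from [in_lo, in_hi] to [out_lo, out_hi], clamping at both ends."""
    t = (value - in_lo) / (in_hi - in_lo)
    t = min(1.0, max(0.0, t))  # keep out-of-range readings inside the output range
    return out_lo + t * (out_hi - out_lo)


def readings_to_cv(temp_c, humidity_pct, soil_raw):
    """Turn one set of sensor readings into control values (all ranges are assumptions)."""
    return {
        "rotation_from_temperature": scale(temp_c, 15.0, 35.0),
        "rotation_from_humidity": scale(humidity_pct, 20.0, 90.0),
        "filter_drive_from_soil": scale(soil_raw, 0, 1023),
    }


if __name__ == "__main__":
    print(readings_to_cv(temp_c=25.0, humidity_pct=55.0, soil_raw=512))
```

Clamping matters in practice, since a crowded room can push temperature or humidity outside whatever range was chosen during calibration.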

Recurring layered bells

- Source: Axioma’s Rhodonea oscillator routed through three parallel paths gated by ZZC SC-VCA (soft-clipping VCA), then fed through Audible Instruments Resonator (modal resonator), AS Delay Plus Stereo, and FLAG Electric Ensemble; the three voices are then combined and sent to Valley Plateau (plate reverb), Hetrick Contrast (brightening phase distortion), and AS Delay Plus Stereo

- Modulation: three divisions of unless games tancor (a binary-tree-shaped gate machine inspired by the Cantor set) control the gates and advancement of three identical but independent voltage sequences, set by Computerscare I Love Cookies, which are then quantized and offset to three different octaves before being sent to the Resonators’ pitch inputs; two of tancor’s divisions also drive independent SynthKit PrimeClock Dividers modulating the Resonators’ damping

- Result: melodic lead element characterised by recursion and natural timbre, its complexity only revealed if the mushroom is approached from at least three sides at the same time
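
To give a feel for the kind of self-similar rhythm the Cantor set suggests, the sketch below builds a gate pattern with the classic middle-third construction. It is not the algorithm of the tancor module, whose internals are not described here; it only illustrates the mathematical idea the module is said to be inspired by.

```python
def cantor_gates(depth):
    """Return a list of 0/1 gates of length 3**depth following the Cantor set.

    At every step each open gate (1) becomes "open, closed, open" and each
    closed gate (0) stays closed across its three sub-steps.
    """
    pattern = [1]
    for _ in range(depth):
        refined = []
        for gate in pattern:
            refined.extend([gate, 0, gate])  # the middle third is always removed
        pattern = refined
    return pattern


if __name__ == "__main__":
    for depth in range(4):
        # Print each level as a row of "x" (gate open) and "." (gate closed).
        print("".join("x" if g else "." for g in cantor_gates(depth)))
```

Each level contains the previous one at a smaller scale, which is what makes the resulting rhythm read as recursive.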

Glitches

- Source: Hora Soft Bell sent by Count Modula Switch to one of Valley Plateau (plate reverb), NYSTHI Dissonantverb (dual pitch-shifted plate reverb), NYSTHI Wormholizer (delay/reverb), AS Delay Plus, AS Super Drive (overdrive), Chowdsp ChowChorus, Chowdsp Warp (distortion), Alright Devices T-Wrex (bitcrusher/decimator), or Hetrick Bitshift. The parallel paths are then mixed together

- Modulation: the appearance of a dot in Processing triggers Sha#bang Modules Collider (which in turn triggers Hora Soft Bell), while dot size modulates the frequency of Collider’s particles and the Soft Bell level; a SynthKit Fibonacci Clock Divider drives Count Modula Switch, defining the amount of time the signal is fed to each path

- Result: glitches based on the combination of fractals, physical modelling, and digital manipulation
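
The interplay between a clock divider and a sequential switch can be sketched as follows. This is an illustration under assumptions, not a description of the actual patch: it assumes the switch advances by one path each time a single divider output fires, and both the chosen division and the tick counts are hypothetical. Only the number of paths (nine) is taken from the list of effects above.

```python
def fibonacci_divisions(count):
    """Return the first `count` distinct Fibonacci numbers: 1, 2, 3, 5, 8, ..."""
    a, b = 1, 2
    divisions = []
    for _ in range(count):
        divisions.append(a)
        a, b = b, a + b
    return divisions


def route_signal(num_ticks, division, num_paths):
    """Return the index of the active path at every clock tick.

    A divider output with the given division fires once every `division` ticks,
    and each firing moves the switch to the next path, wrapping around at the end.
    """
    path = 0
    routing = []
    for tick in range(1, num_ticks + 1):
        routing.append(path)
        if tick % division == 0:  # the divider output fires on this tick
            path = (path + 1) % num_paths
    return routing


if __name__ == "__main__":
    print(fibonacci_divisions(6))
    print(route_signal(num_ticks=12, division=3, num_paths=9))
```

Picking a larger Fibonacci division simply lengthens the time the signal dwells on each effect path.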

To increase the sense of presence and immersion, we experimented with sound spatialization. The five independent voices are streamed to Reaper, where they are encoded into 3rd-order Ambisonics and positioned on the virtual 3D stage using IEM StereoEncoders. Finally, they are merged and decoded to the custom 6-speaker layout through IEM AllRADecoder. By simulating the final result through binaural rendering (via IEM BinauralDecoder), we were able to work on the soundscape before getting access to the location and the available equipment, as well as to record multiple versions for documentation.
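
As a rough illustration of what Ambisonic encoding involves, the sketch below computes the channel count for a given order and encodes a mono sample at first order only, using the common AmbiX convention (ACN channel order W, Y, Z, X with SN3D normalization, azimuth measured counter-clockwise from the front). It is a simplified stand-in, not the IEM plug-ins' implementation, which handles the full 3rd-order case.

```python
import math


def ambisonic_channel_count(order):
    """Number of channels of a full-sphere Ambisonic signal: (order + 1) squared."""
    return (order + 1) ** 2


def encode_first_order(sample, azimuth_deg, elevation_deg):
    """Encode a mono sample into first-order AmbiX channels [W, Y, Z, X].

    Assumes ACN ordering, SN3D normalization, and azimuth counter-clockwise from front.
    """
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    w = sample                                # omnidirectional component
    y = sample * math.sin(az) * math.cos(el)  # left-right
    z = sample * math.sin(el)                 # up-down
    x = sample * math.cos(az) * math.cos(el)  # front-back
    return [w, y, z, x]


if __name__ == "__main__":
    print(ambisonic_channel_count(3))  # channels carried per 3rd-order stream
    print([round(c, 3) for c in encode_first_order(1.0, 90.0, 0.0)])  # source hard left
```

The channel count explains why the encoded voices are handled in a DAW that supports wide multichannel tracks: a 3rd-order stream carries 16 channels.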

Processing Sketch → OBS → MadMapper

Since we wrote the Processing sketch in p5.js, we lost the ability to stream the canvas directly to MadMapper as a texture through Spout. In return, we gained the ability to easily access the sketch from another device on the network, which allowed us to offload projection mapping to a second device, using an OBS browser source and the obs-spout2 plugin to stream the texture to MadMapper.

Processing Editor / VCVRack → OBS → MadMapper

To map the synth, the Processing editor, and its console onto the plinth in real time, we used OBS to capture the windows and share them over NDI, using obs-ndi (now DistroAV), with MadMapper on the second device.

./ outcome

Feedback

During assessment week, the installation was viewed by roughly 30 visitors (peers and professors), and by a smaller number (~20) in the subsequent days. Visitors engaged with the installation first by tentatively walking around the space and approaching the mushroom; then, once they discovered that their actions could affect the installation, by exploring the mapping through repeated approaches and retreats; and finally by inviting companions to take other positions around the plinth. Others preferred to simply spend some time in the room, sitting on the benches lining the walls, which suggests the piece supported both solitary reflection and social interpretation.